6 Short Reasons Why I Am Now a Skeptic of So-Called 'Artificial Intelligence'

My series exploring AI applications this year now comes to a disappointed conclusion. I'm no longer "All in on AI" and here's why.

It has been two and a half months since the last installment in my series this year exploring how to use artificial intelligence technologies creatively.

I concluded the last piece by describing how I was researching ways to co-write articles, essays, fiction, and even autobiography with AI:

For those just starting this series, the beginning is here, inspired by the major spring update to ChatGPT’s image-generating program:

Here are some of the other installments in the series:

And where am I now with this?

Well, I’ve given up on it and have now instead gone in the opposite direction.

Instead of using AI to help me write, now I’m trying to show explicit proof that I’m writing myself, including revealing typos and stops and starts in handwriting.

Here are some of the experiments I’ve been doing on YouTube. Here are three hours’ worth of video of me writing by hand over the course of several mornings. Enjoy:

So yes, this is the tentative result right now: I’m skeptical about the utility of so-called AI programs today. And here are some short reasons why, which I’ll unpack in future pieces investigating this in greater depth:

The outputs are currently too factually unreliable, and I do not see how it is possible for LLM (large language model) technology to fix this innate problem of it simply “guessing” what the “correct” answer is to a problem when that is what it’s programmed to be doing. These aren’t “hallucinations;” they are not “bugs” in the system. These are intentional lies and they will serve them up to users and programmers alike, even if told directly not to do that.

I am dissatisfied with my experiences on three freelance AI-training writing gigs from two different AI-centric companies. I have seen what goes into the sausage and now am hesitant to take another bite. I cannot recommend to any other writers right now that they should try an AI writing gig, since my experiences have been so universally negative.

The outputs will not follow direct instructions given, whether from the user or the programmer, thus yielding outputs which can be harmful regarding antisemitism, racism, sexism, misogyny, mental health, and other real-world dangers.

Given these factual shortcomings and unreliabilities in maintaining safety standards, it becomes very difficult, both morally and ethically, to justify the extreme levels of pollution, power usage, and other hidden externalities which the technology requires.

The term “artificial intelligence” appears to primarily be a marketing term rather than something concrete and objectively definable. Technology entrepreneurs use it because it invokes science-fiction happy feelings and sounds more exciting than the proper name for the technology: an LLM, which is absurdly boring. I’m going to start putting “artificial intelligence” in quotation marks and excessively appending the qualifier “so-called” in front of it.

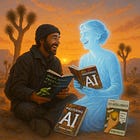

The primary reason I got into using LLMs was to create marketing materials for our book publishing company: to create book covers, internal illustrations, and Substack essay art quickly, cheaply, and effectively. The AI art is genuinely “better” than what a whole lot of humans can do. But how effective as a marketing device can LLM-created images and videos become if they start to become pervasive, potentially to the point of provoking a backlash? Rather than AI-created marketing imagery drawing people in, it instead ends up repelling them.

So which is the way to go, “Pro-AI” or “anti-AI”? In which direction would you bet your business, career, and family’s financial future? This is potentially the next big ideological divide for the coming generation. As the Baby Boomer-centric Left vs. Right, Liberals vs. Conservatives paradigm continues to crumble, the question of where to stand on the Big Bet of so-called “artificial intelligence” arises next.

And yet, I do not take the “anti” position, because, as stated in point 5 above, there doesn’t actually seem to be anything there to take a stance against. “Artificial intelligence” is an illusion: it is a puppet, a marionette, a talking dummy that is simply very sophisticated. AN IDOL. ChatGPT is more like a very sophisticated video game or a very expensive novel with an infinite number of uncredited authors. This is just the PR spin of a millennial who has come up with the ultimate Choose Your Own Adventure book. Well done, Sam Altman.

I take the Skeptic position now on matters of alleged “artificial intelligence.” Should we never use a computer program that chooses to market itself as “AI”? Should we never use LLM-based technology? Is it OK to use one company’s AI programs but not another’s?

I don’t know anymore. But it’s time to find out. After more than a year of AI boosterism and experimentation to figure out how to use these tools to build our publishing company, it’s time to slow down and assess whether this is really going to be a useful path forward or whether further rebellion against it will be necessary. It may turn out that, over the next five years, there will be more wealth to be made betting against the big-technology industry than betting in favor of it.

Right now, it seems as though this middle-path approach is appropriate. Some “AI” companies and programs may operate in an ethical way and might produce products that can be helpful. We’ll have to take them one at a time as they come.

I am staying open-minded as I now approach this question as a journalist conducting an investigation.

Where are you in your experiments with these technologies?

David, I agree with much of what you're saying. Learning and discovery should almost always be slow and tedious with plenty of mistakes and those wonderful, satisfying moments of epiphany. Read, take notes, and then write like a madman!

I want to know more about #2, because I think I applied for one of those, but I never onboarded because the pay was low and I didn't think it would be worth my time as a side hustle.