AI: When Myth Meets Reality

We're Living in the Age of Artificial Intelligence, Whether We Like It or Not!

Like those who lived through the 1950s and 60s, we are in an age that was prophesied in the days of old. In those middle decades of the 20th century, human society experienced the beginnings of the Space Age with the launch of mankind’s first orbital satellites. This was the precursor to man leaving our planet and stepping on the moon. Prominent works of literature predicting humans taking to outer space go way back. Important examples include Johannes Kepler’s Somnium (1634) and Jules Verne’s From the Earth to the Moon (1865). Even earlier, as far back as the 2nd Century A.D., Lucian of Samosata’s True History is considered the first known work of fiction to depict humans traveling into outer space.

As these works exemplify, space travel had a long-standing place within mankind’s imagination. Yet, it was never a concrete reality until the 1960s. Suddenly, going up and out of that great dome of our sky was no longer merely a fictional activity, but another marvel of the ingenuity of mankind. Truly, the places to go had now expanded beyond our little planet. What a time it was to be alive.

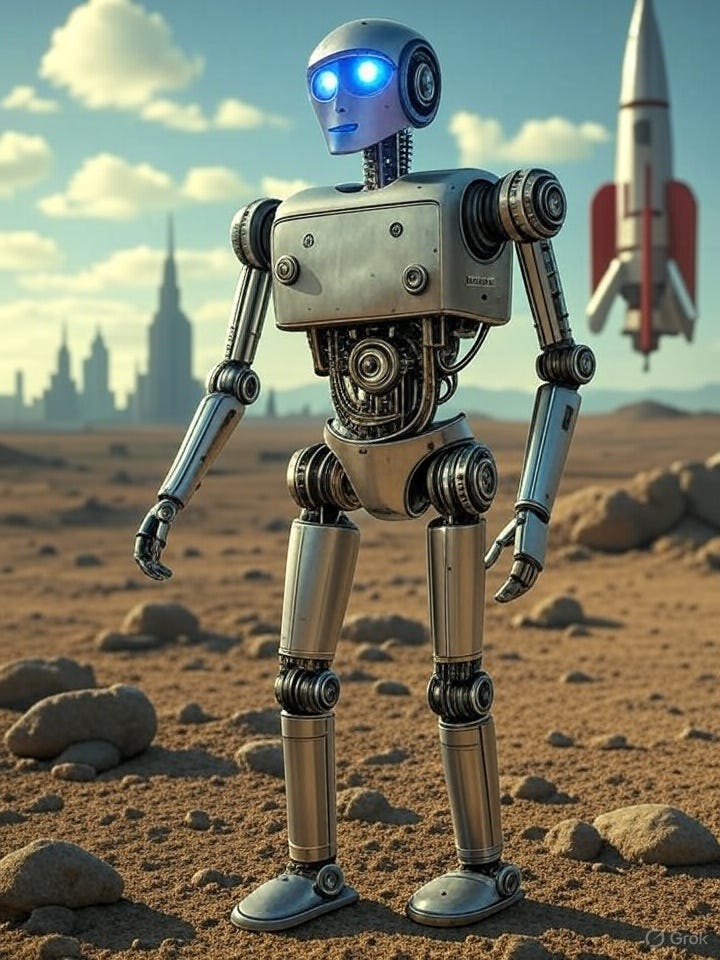

The same could be said for our own age, which surely history will characterize as the beginning of the Age of Artificial Intelligence, or AI. Just like space travel, AI has certainly been a part of our imagination for a long time. In our literature and mythology, examples of AI-like creatures appear as automatons or machines. In ancient Greek mythology there was Talos, the giant bronze protector of the island of Crete. There are many other examples, such as the Golem of Jewish folklore and the False Maria from the 1925 novel Metropolis by Thea von Harbou, to name but a few.

Yet, as with space travel, it was not until the 20th century that AI computing began. While computing models came out in the 1930s, AI technology started in the 1950s, beginning with the framework laid out in Alan Turing’s “Computing Machinery and Intelligence.” While practical applications were deployed in the mid-1950s, the first commercially available application came out in 1980, with XCON, which was “a rule-based expert system designed to assist the Digital Equipment Corporation (DEC) in configuring VAX computer systems.”

Yet, the explosion of AI technology really started two decades into the 21st Century, our present day. Many mark it with the launch of ChatGPT in late 2022, which led to the number of available AI tools multiplying from the hundreds to thousands. This was all due to the advances in “Large Language Models (LLMs), transformer-based models like GPT-3 (2020) and GPT-3.5 (2022), and generative AIs, such as image generators.”

According to the AI known as Grok, “As of 2025, approximately 5,000 to 20,000 AI systems are publicly and commercially available for use by consumers and businesses, including chatbots, virtual assistants, generative AI tools, and enterprise solutions.”

The AI explosion has already occurred.

By the way, if you hadn’t already suspected, the history of AI in this article comes to you via Grok. Researching a topic, I must admit, was always a tedious journey for me. I’m showing my age here, but such tasks in my college days necessarily involved using index cards, the Dewey decimal system, and feeding microfiche film through a magnifying lens machine in the bowels of the library.

I don’t miss that at all.

Since the mid-1990s, the internet and search engines like Google have made that a lot easier. By using the correct search terms, you could get a list of websites with information, but it was up to you to go to those sites and do the synthesis and analysis. Not anymore. For this article, for example, I wanted to do a survey of how AI characters were portrayed in literature and media (this will be in part 2, by the way). However, I decided to do a quick history of the technology first before getting into the meat of my topic. I asked Grok a number of questions, all saved at an internet address for my quick access, https://x.com/i/grok?conversation=1935034198932267016.

In short order, Grok helped me with the background information for the article, something I would otherwise have done via Internet research. But even better, by asking the right questions in the right way, I got not just the history of AI in reality and in literature, but also an analysis with justifications for each conclusion and a summary of each analysis. It was up to me then to pick and choose what I wanted to feature in my article.

What a change for the better—no more tedious research!

While Grok didn’t write the article for me, it probably could have if I had wanted it to. If not Grok, then another AI. And this is where the troubling aspects of AI begin.

At What Price?

Like all new revolutions in technology, the AI revolution will come at a price. Automobiles replaced horses, diesel engines replaced steam locomotives, factories replaced farms. All of this came at a human cost. As we are in the very beginning stages of the AI age, we do not yet have a feel for how many humans could be replaced by AI in the workforce. The truly troubling aspect of this is that AI has the potential to replace all of us.

What need have we of factory workers when AI robots can do it all? The same goes for farming. Yet, also, dare I say it, AI could replace all of the information “laptop” jobs many of us now have. In business, what need have we of software developers, of actuaries and underwriters, of information technology security staff, of strategy planners, of sales and proposal writers? The company executives could eventually get all that from an AI.

Even in human fields like medicine, there will be an impact. For example, who needs a radiologist if an AI can do the same work for a lot less money? Will the rich have their robot nannies to bottle-feed their babies and change their diapers? How about the arts? Can AI write the greatest novel of all time, and paint the best pictures? The same could be said for the military. With forms of AI replacing them, soldiers, sailors, and airmen no longer have to die needlessly in battle. Yet, without that human cost factored in, how much easier will it be to start a war? (It will then be robots fighting one another … the best tech wins.)

If all of us are replaced by AI, what is there left for us to do? If AIs are doing all the work that humans used to do to earn money, how will we feed and clothe ourselves? What would that do to consumerism and the economy in general? We spend money on consumer goods and commodities—AIs don’t need any of that. What will happen to the middle class? If no one is earning or spending money, who’s going to pay for the AIs?

Will there be a super-rich royalty of elites and executives who run the AIs? Will the rest of us just subsist in our tenement housing? Will the AIs eventually take over, even taking the place of the elites? And then, will AIs have exclusive control over our military and nuclear weapons?

These are but a summary of the pressing questions involved with the future of AI.

Regardless, it must be said that AI, while powerful and potentially dangerous, is itself a morally neutral technology. Unfortunately, the same can be said of a nuclear bomb. One can argue that, if used only for tactical reasons against enemy combatants in a just war, a nuke could be used morally. It’s the troubling potential for using it for evil that is the problem.

As with nuclear weapons, the genie is already out of the bottle for AI, whether we like it or not. Given that, we must be watchful and concerned about any conceivable negative impact to humanity. Our new Pope is certainly wary. As popes do in these latter days, Pope Leo XIV chose his name in honor of a predecessor, Leo XIII, who in 1891 took on the industrial revolution. Shortly after his election, the new pope explained: “I chose to take the name Leo XIV... mainly because Pope Leo XIII in his historic Encyclical Rerum Novarum addressed the social question in the context of the first great industrial revolution. In our own day, the Church offers to everyone the treasury of her social teaching in response to another industrial revolution and to developments in the field of artificial intelligence that pose new challenges.”

I’m glad the pope has taken on this challenge. We should not be Luddites, but we must be willing to impose limits on AI. As such, for every new possible application of this technology (replacing our police force with AI robots, for example) we should always determine whether the positives for humanity outweigh the potential negative impacts. In other words, will it cause more harm than good?

But even above such strictly utilitarian concerns, we must acknowledge that our work provides meaning to our lives. We are creatures made to have occupations. The goal for AI should always be to assist us in those occupations, not replace us. Wisdom should guide us, not the constant calls for greater profits, speed and efficiency.

This takes me back to my starting premise for this article, which will be in part two:

Have the prophets of literature and media shown us our own future if we are not wary of the power of AI?

With our real concerns about the use of AI in mind, this article will continue with a survey of AI characters in literature and media. Using a sampling provided by Grok, we will review AI characters portrayed as evil, as good, and as morally neutral. As with the history above, Grok provided lists of characters within categories along with justifications for placing them in those categories. Most of Grok’s choices were sound, in my judgment, but I did disagree with certain ones. And there were some gaps, some missing characters that are important to include.

I’ll get into that next time. Check back here soon!